n-Dame_Heritage

n-Dimensional analysis and memorisation ecosystem

for building cathedrals of knowledge in Heritage Science

Methodology

Work at the interface of material objects, knowledge domains, research objectives, disciplinary areas

To work with data, we collaborate closely with data producers to highlight and preserve the intimate relationships between material objects, knowledge domains, and research objectives.

This table shows how we organise our collaborative work. Instead of simply archiving texts or drawings, we build a corpus of scientific resources deeply connected to the social contexts that generate it. This includes restoration data, such as diagnostics from architects, alongside research on materials. Other researchers investigate medieval timber techniques, analyzing how and when wood and metal were used and preserved, while others reconstruct and date structural remains, combining archaeology, physics, chemistry, and engineering.

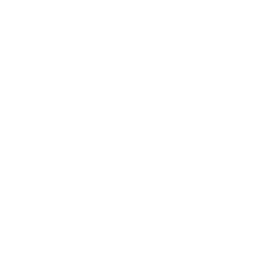

While some themes focus on actual and sometimes urgent restoration needs, such as structural integrity, others address broader questions on materials and structures, for example the climate knowledge derived from centuries-old remains. A good example of this approach is the timber frames. On the screen, you can see the digital reconstruction created by our team, based on data about their state before the fire. This model served as a key piece of the digital documentation and the collaborative work.

First, this model integrates information from multiple sources: thousands of photographs, traditional and digital surveys, and the digitisation of charred timber remains from the vaults. The same model also serves several purposes: it provided essential information to the architects working on the restoration, and it supports the wood working group in identifying and digitising elements stored in reserves.

The model is used to precisely document and link the various wooden elements being studied. Additionally, the reconstruction helps verify the spatial location of these elements in the original structure, confirming or challenging hypotheses about the remains. It also accurately locates dendrochronological data, essential for dating the wood and determining its provenance.

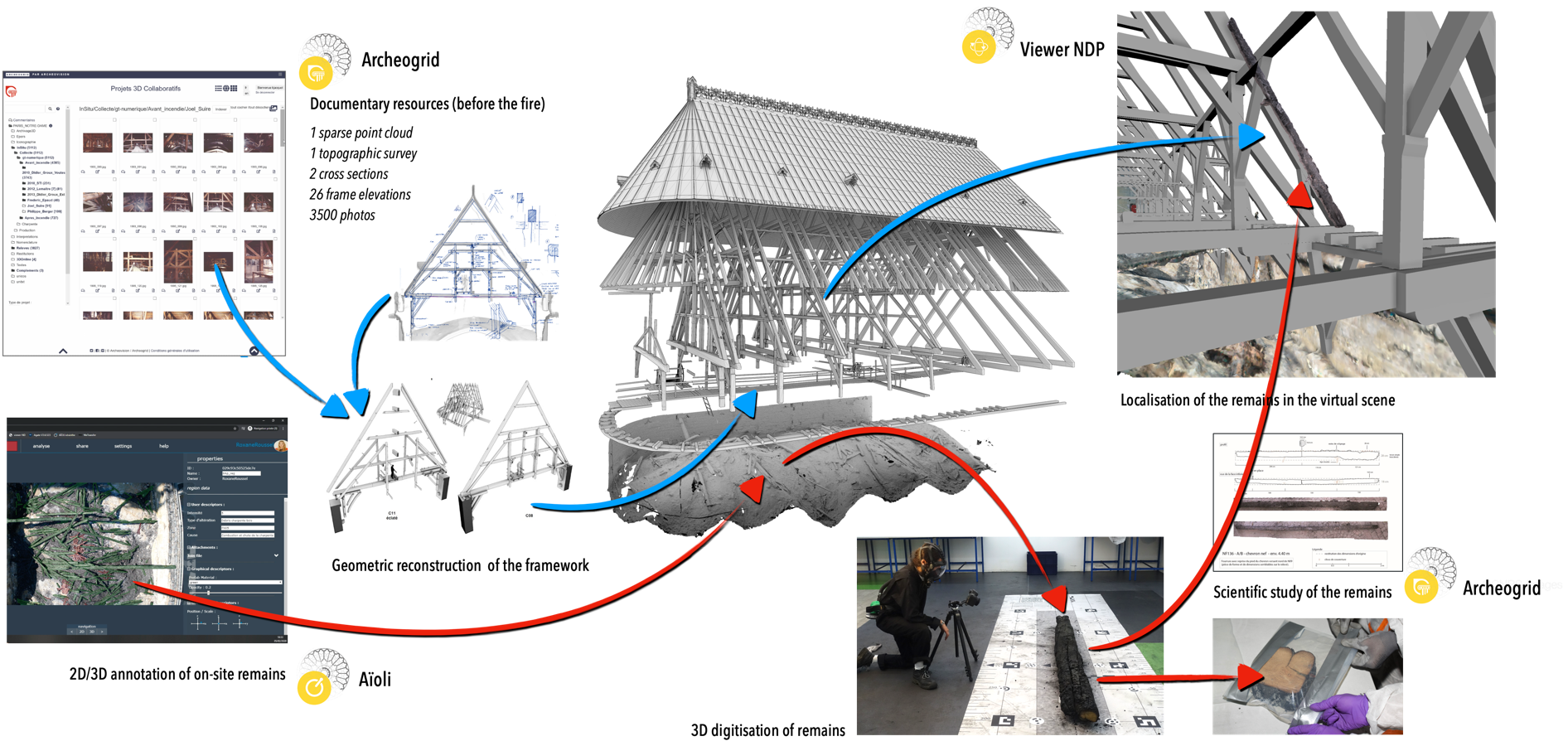

In implementing our methodology, we face several key challenges related to data structuring, semantic enrichment, and knowledge models. These challenges span a complex, multi-dimensional space. Data acquisition and provenance are critical to ensuring that information is accurately sourced and traceable, especially as we integrate data from dozens of actors, various disciplines and time periods. We must also address space and time, capturing both the physical and historical transformations of the cathedral while linking material remains to their broader contexts. Our datasets also record scientific activities in the cathedral or in the lab. Form is another challenge, as we work with various digital representations such as 3D models, photographs and drawings at various scales; ensuring consistency across these formats is essential. Additionally, we manage vocabulary (the terms we use to describe objects and processes), collecting and organizing these terms to interlink multiple disciplines. Finally, integrating knowledge domains and research protocols requires formalising diverse methodologies into a unified and adaptable descriptive framework.

This figure illustrates our methodology for producing semantically enriched data with a practical example. The image on the left is a photograph of the timber frames taken before the fire. This image is documented with metadata describing when and where it was taken. Next, the photograph is spatialized within a hypothetical 3D model of the timber structure, which is then aligned with a 3D scan of the frames after the fire; together they represent different temporal states within a diachronic representation. For a specific element, like the tie beam (entrait in French), we now have two representations: before and after the fire. This element is connected to terminological labels describing the structural elements of the frames. Moreover, the tie beam is studied through dendrochronology (a knowledge domain), applying research protocols to date the wood, trace its provenance, and estimate the size of the tree.

The key feature of our approach lies in the relationships between these dimensions, which allow cross-fertilization of data. Semantic attributes from one dimension can inform other dimensions, just as insights from one knowledge domain can inform others.

Challenges in multi-temporal digitisation

The first challenge of our approach is translating the material object into digital data. This process goes beyond creating a simple 3D scan of the cathedral. It involves building a comprehensive framework capable of integrating multiple scans at different scales—from individual architectural elements to the broader urban context of the monument—and at different moments in time.

A key step was developing a robust alignment strategy to compare these different temporal states. The method we introduced is based on an adaptive Iterative Closest Point (ICP) algorithm that focuses on collections of architectural and structural elements, such as pillars. This method allowed us to align hundreds of scans and thousands of photographs from various periods, and to track changes in the cathedral's structure with high precision. For example, we provided the chief architects with precise comparisons of the vaults before and after the fire, so that the deformations caused by the disaster could be analysed accurately.
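To make the alignment idea concrete, here is a minimal sketch of standard point-to-point ICP in two dimensions. It is not the adaptive variant described above: it assumes a handful of hypothetical pillar centres seen in plan, brute-force nearest-neighbour matching, and a closed-form 2D rigid fit. All function names and coordinates are illustrative assumptions.

```python
import math

def nearest(point, candidates):
    """Return the candidate closest to point (brute force, squared distance)."""
    return min(candidates, key=lambda q: (q[0] - point[0]) ** 2 + (q[1] - point[1]) ** 2)

def best_rigid_2d(src, dst):
    """Least-squares rotation angle and translation mapping paired src points onto dst."""
    n = len(src)
    cxs, cys = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    cxd, cyd = sum(p[0] for p in dst) / n, sum(p[1] for p in dst) / n
    s_dot = s_cross = 0.0
    for (xs, ys), (xd, yd) in zip(src, dst):
        ax, ay = xs - cxs, ys - cys
        bx, by = xd - cxd, yd - cyd
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation moves the rotated source centroid onto the target centroid.
    return theta, cxd - (c * cxs - s * cys), cyd - (s * cxs + c * cys)

def apply_rigid(points, theta, tx, ty):
    """Rotate points by theta about the origin, then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]

def icp_2d(source, target, iterations=20):
    """Alternate nearest-neighbour matching and rigid fitting for a fixed number of rounds."""
    current = list(source)
    for _ in range(iterations):
        matched = [nearest(p, target) for p in current]
        theta, tx, ty = best_rigid_2d(current, matched)
        current = apply_rigid(current, theta, tx, ty)
    return current

def rms_error(a, b):
    """Root-mean-square distance between two equally ordered point lists."""
    return math.sqrt(sum((p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2 for p, q in zip(a, b)) / len(a))

# Hypothetical pillar centres (metres) and a slightly displaced later survey.
pillars = [(0.0, 0.0), (4.0, 0.0), (8.0, 0.0), (0.0, 5.0), (4.0, 5.0), (8.0, 6.0), (2.0, 9.0)]
later_survey = apply_rigid(pillars, 0.05, 0.3, -0.2)
aligned = icp_2d(later_survey, pillars)
print(rms_error(later_survey, pillars), rms_error(aligned, pillars))
```

Restricting the matching to stable structural elements, as the pillar example suggests, keeps the correspondences reliable even when other parts of the building have deformed between the two states.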

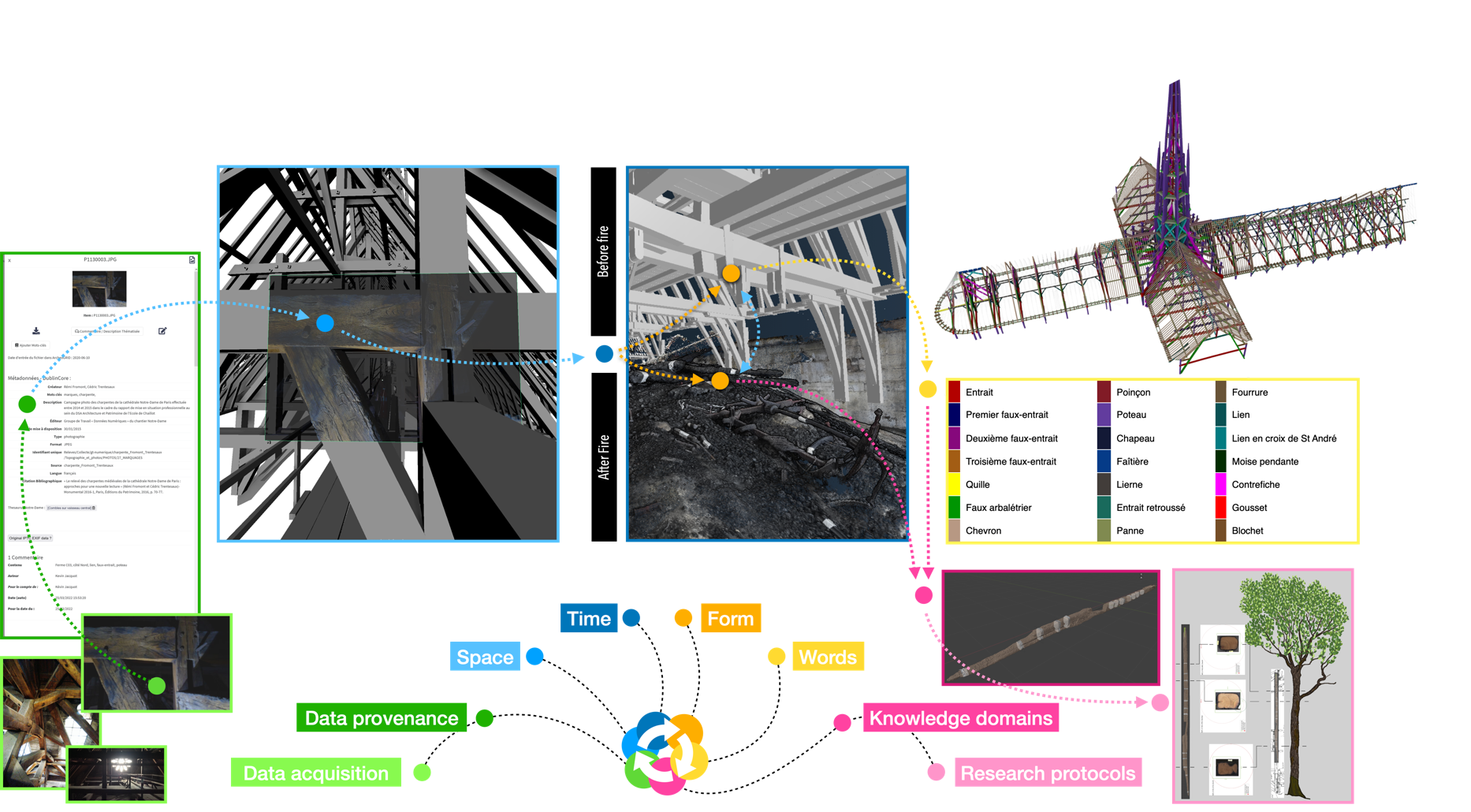

Another key application of our methodology was monitoring the recovery of remains after the fire. Using a custom cable-mounted camera system, we were able to capture a precise cartographic record of each fragment's location in space.

As shown in the images at the bottom of the slide, we documented the different phases of the recovery process: before, during, and after the remains were removed. This method, developed in an emergency context, recorded the state of the debris at specific moments and produced an important document that supports later scientific observations.

Challenges in massive digitisation of remains

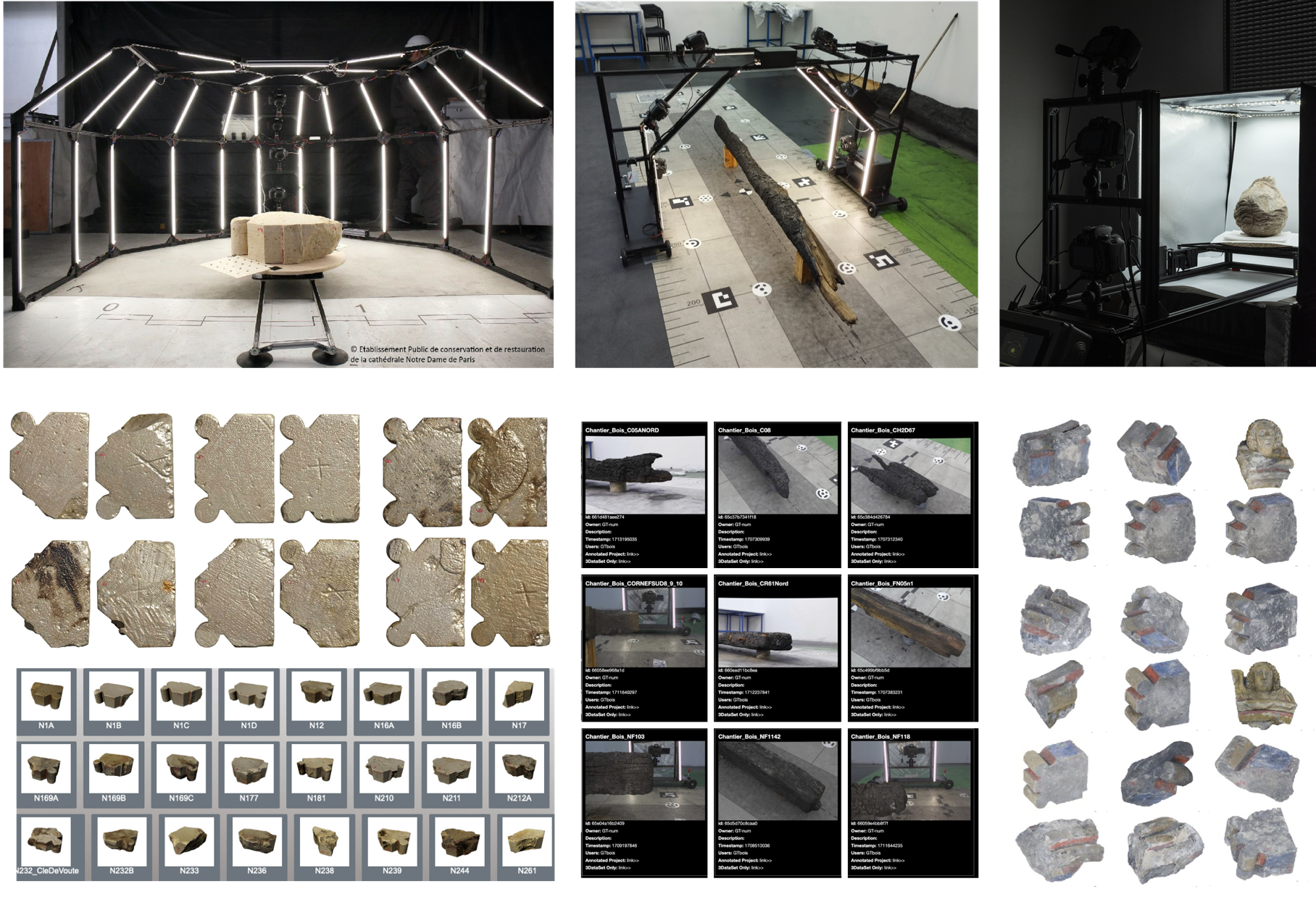

A second aspect of digitization concerned the scale of the remains. We introduced ad hoc solutions for digitizing large corpora of elements. The aim was to digitize not just individual objects but entire collections, with harmonized acquisition and processing procedures that yield homogeneous and comparable data, essential for fine comparative analyses (for example of tool marks, surface characteristics, and materials). These digitizations are not conducted to accumulate data but to answer specific scientific questions. The solutions employed are not off-the-shelf tools but devices designed according to analytical requirements. Our collection includes hundreds of digitized elements, and will likely exceed 1,000, with each acquisition based on hundreds of photographs used to create highly precise 3D models that can be reused in future studies.

This figure presents some examples of our setups: devices for scanning large fragments like voussoirs, charred wooden pieces, and smaller archaeological elements like metal or stone. These digitizations form not just a database but a long-term scientific archive that supports future studies, especially when we’ll open the corpus to the scientific community, starting from 2025.

Our progress in digitization has also extended to documenting on-site activities, with a method to document and preserve information about acquired data. The QR code visible on the acquisition set links to a documentation structure that records and stores information about the provenance of the data: who, where, when, what, how, and why a dataset was produced. We connect these provenance data to the data processing chains that produce the 3D digitizations, and organize each dataset into a file structure suited to various uses and publication formats, and then to long-term archiving.
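As an illustration of the who, where, when, what, how, and why structure, the following is a minimal sketch of a provenance record. The class name, field values, and JSON serialisation are assumptions made for this example; they do not reproduce the project's actual documentation structure or its CIDOC-CRM mapping.

```python
import json
from dataclasses import dataclass, asdict, fields

@dataclass(frozen=True)
class ProvenanceRecord:
    """One acquisition event, described by the six questions named in the text."""
    who: str
    where: str
    when: str   # ISO 8601 timestamp, by assumption
    what: str
    how: str
    why: str

def missing_fields(record):
    """Names of fields left empty, so incomplete records can be flagged on site."""
    return [f.name for f in fields(record) if not getattr(record, f.name).strip()]

def to_json(record):
    """Serialise the record so it can travel alongside the dataset files."""
    return json.dumps(asdict(record), ensure_ascii=False, sort_keys=True)

# Hypothetical values, for illustration only.
record = ProvenanceRecord(
    who="operator A",
    where="on-site acquisition set",
    when="2024-01-01T10:00:00",
    what="charred timber fragment",
    how="photogrammetric acquisition",
    why="",
)
print(missing_fields(record))
print(to_json(record))
```

Keeping the record immutable and checking completeness at capture time reflects the idea of documenting provenance while the acquisition is happening rather than reconstructing it afterwards.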

This method integrates provenance throughout the entire processing chain, from photogrammetric acquisition to 3D reconstruction, directly influencing image processing based on acquisition strategies and technical choices. Building on this, we developed MeMos, a compact hardware-software device for capturing and documenting data provenance in real-time. Currently deployed in large-scale 3D digitisation, MeMos is also adapted for field activities like material sampling and instrumental measurements. It integrates data into the CIDOC-CRM model, standardising interpretations of activities, actors, and contexts related to the studied object.

Spatialising scientific observations and related data

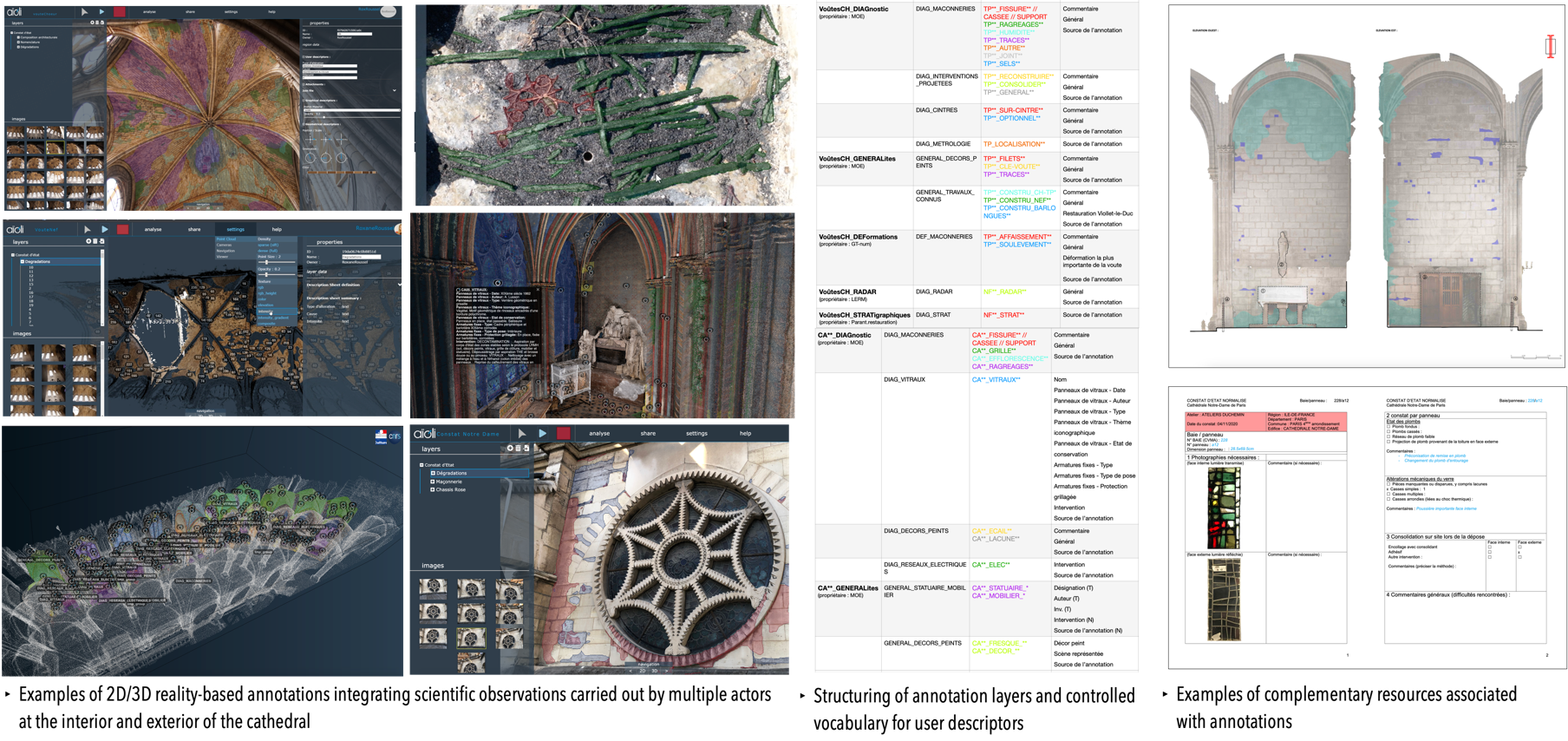

Another fundamental aspect of our research concerns the transition from digital data to multidisciplinary knowledge. This involves integrating, within the digitizations of material objects, the interpretive layers produced by the researchers who study these objects and derive insights from them. To achieve this, we conducted large-scale experiments with a 2D/3D annotation method using the Aioli platform, developed in my laboratory, to spatialize observations and related data.

This work allowed us to exploit tens of thousands of spatially organized photographs, representing multiple temporal states. We defined descriptive structures by thematic areas, integrated observations from various researchers, and paid particular attention to nomenclature and controlled vocabularies. The annotation corpus includes nearly 13,000 annotations within more than 300 collaborative projects.

Linking research protocols with spatialised observations

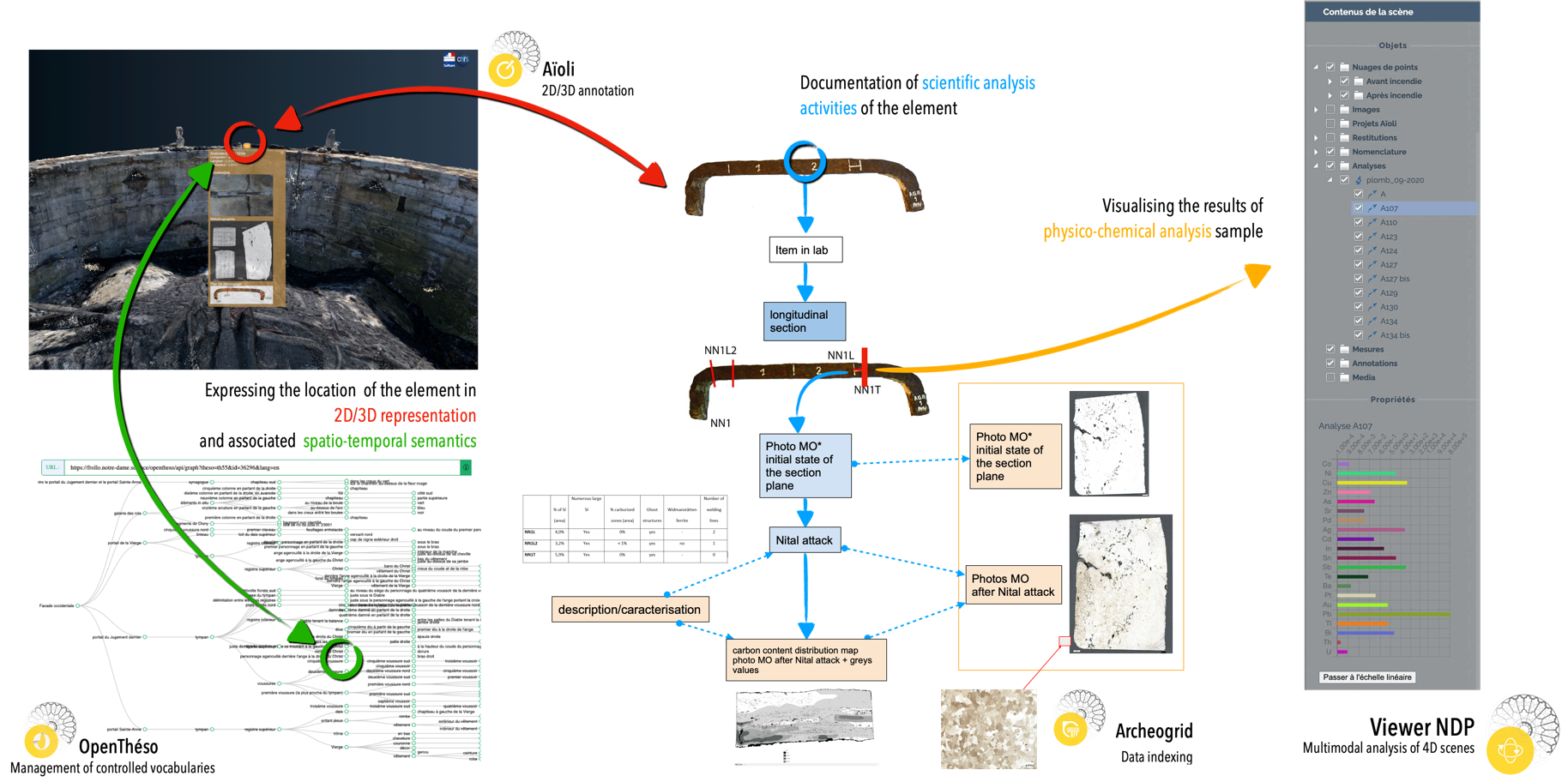

A key research question that follows is the relationship between the observable aspects of an object and the conceptual aspects we aim to formalise. Our goal is to link the digital representation of studied objects with the knowledge generated about them, creating a connection between digital data and its semantic expression (spanning from data provenance to research protocols).

For instance, this staple’s position can be expressed with precise 3D coordinates, but also through controlled vocabulary, indicating its location within a specific area of Notre-Dame in a textual source. Additionally, the documentation related to the scientific study of this staple, including cut planes and analytical operations, must be connected to a documentation structure that records the full analytical process, as shown on the right side of the slide.

Scientific narration of virtual reconstruction hypotheses and reasoning

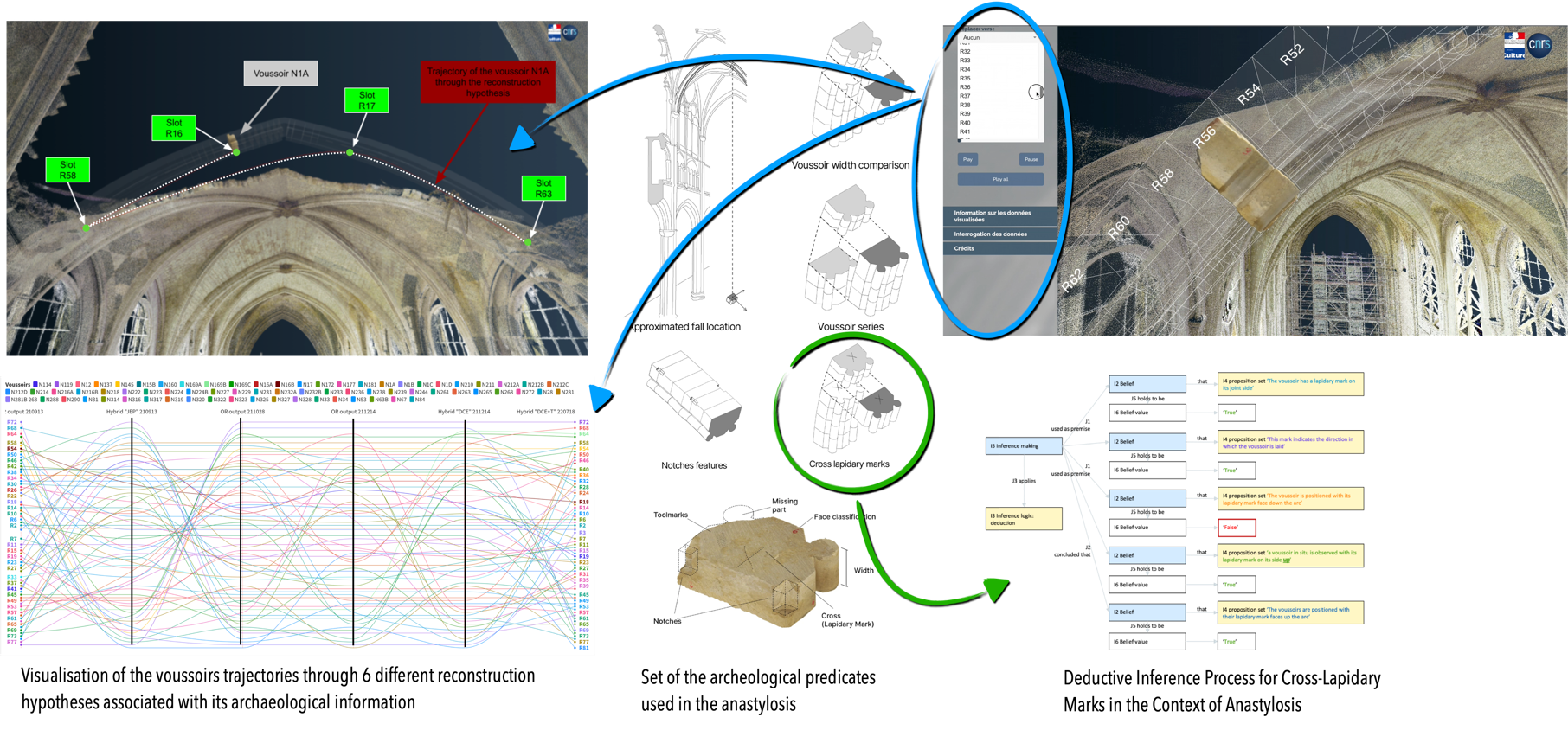

The anastylosis of the collapsed nave arch combined efforts from architects, archaeologists, art historians, material scientists, and computer scientists. Using 4D annotation, over 80 voussoirs were tracked through 3D images to locate and identify their positions post-recovery. Predicates such as fall position, dimensions, and lapidary marks guided both human and computer-assisted reasoning to reconstruct the arch. Hypotheses were tested digitally via a 3D viewer and validated on-site by physically reassembling the voussoirs. This process advanced knowledge of the arch’s structure, informed its restoration, and highlighted the dynamics of interdisciplinary scientific collaboration, now being formalized to trace the evolution of collective knowledge.

The accompanying diagram illustrates how structured reasoning was applied to understand the role of lapidary marks in positioning voussoirs during the anastylosis process. Initially, the team hypothesized that these marks indicated the orientation of the elements in the structure, proposing that they faced downward toward the arch. However, observing a voussoir in situ during restoration, with its mark facing upward, led the team to adjust their approach, orienting all marks upwards. A dedicated 3D viewer supported this collaborative process by documenting the key steps of the anastylosis, capturing six primary reconstruction solutions developed over a year. This tool allowed visualization of the voussoirs’ trajectories through multiple hypotheses, recording their transitions from potential placements to the final consensus. The interplay between digital models and physical assembly provided a detailed trace of the team’s evolving reasoning.
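The idea of following a voussoir through successive hypotheses can be sketched with a very small data structure. The hypothesis labels, voussoir identifiers, and slot numbers below are hypothetical, and the two helper functions are assumptions for illustration rather than a description of the dedicated 3D viewer.

```python
def trajectory(hypotheses, voussoir):
    """Slot assigned to one voussoir in each hypothesis, in order (None if unplaced)."""
    return [(label, placement.get(voussoir)) for label, placement in hypotheses]

def transitions(hypotheses, voussoir):
    """Consecutive hypotheses between which the voussoir's slot changed."""
    steps = trajectory(hypotheses, voussoir)
    return [
        (prev_label, label, prev_slot, slot)
        for (prev_label, prev_slot), (label, slot) in zip(steps, steps[1:])
        if prev_slot != slot
    ]

# Ordered reconstruction hypotheses: label -> {voussoir id: slot index in the arch}.
hypotheses = [
    ("H1", {"V01": 12, "V02": 13}),
    ("H2", {"V01": 14, "V02": 13}),
    ("H3", {"V01": 14, "V02": 15}),
]
print(trajectory(hypotheses, "V01"))
print(transitions(hypotheses, "V02"))
```

Storing every hypothesis rather than only the final consensus is what makes the reasoning traceable: the transitions show where and when the team changed its mind.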

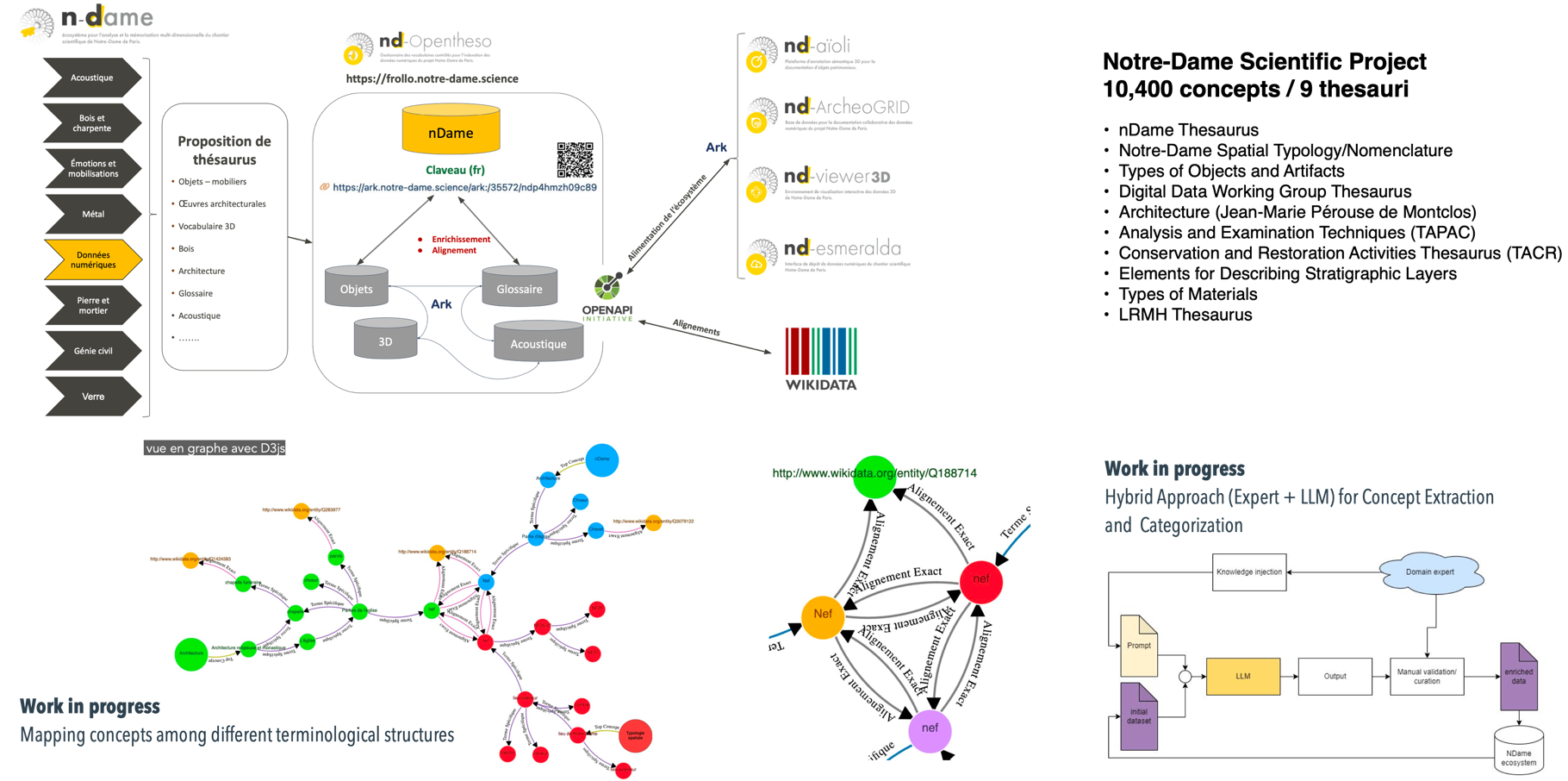

A corpus of interconnected data through multiple dimensions

Vocabulary terms are central to connecting data within our project, which has enabled the creation of a “cathedral of concepts.” OpenTheso plays a vital role, aggregating over 10,000 concepts into nine thesauri—covering materials, techniques, instruments, and actors—curated collaboratively in SKOS format. In addition to the top-down approach, where domain-specific terms are contributed, we are exploring a bottom-up method using NLP and large language models (e.g., BART, LAMA, ChatGPT) to extract and suggest new concepts from textual data. A graph-based concept mapping engine in OpenTheso aligns terms across structures, enabling cross-disciplinary connections and advancing knowledge integration.
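To show how SKOS-style concepts connect terms, here is a minimal in-memory sketch with multilingual preferred labels and broader links. The `ex:` identifiers, the small hierarchy, and the helper functions are assumptions for illustration; they are not taken from the project's thesauri or from the OpenTheso API.

```python
from dataclasses import dataclass, field

@dataclass
class Concept:
    """A concept with skos:prefLabel values per language and skos:broader links."""
    uri: str
    pref_label: dict
    broader: list = field(default_factory=list)

def build_index(concepts):
    """Index concepts by identifier for quick lookup."""
    return {c.uri: c for c in concepts}

def find_by_label(index, label, lang):
    """Identifiers of concepts whose preferred label matches, ignoring case."""
    wanted = label.casefold()
    return [c.uri for c in index.values() if c.pref_label.get(lang, "").casefold() == wanted]

def broader_transitive(index, uri):
    """All ancestors reachable through broader links, nearest first, without repeats."""
    seen, queue = [], list(index[uri].broader)
    while queue:
        current = queue.pop(0)
        if current in seen or current not in index:
            continue
        seen.append(current)
        queue.extend(index[current].broader)
    return seen

# Hypothetical three-level hierarchy around the tie beam example.
thesaurus = build_index([
    Concept("ex:timber-frame", {"en": "timber frame", "fr": "charpente"}),
    Concept("ex:truss-member", {"en": "truss member"}, ["ex:timber-frame"]),
    Concept("ex:tie-beam", {"en": "tie beam", "fr": "entrait"}, ["ex:truss-member"]),
])
print(find_by_label(thesaurus, "Entrait", "fr"))
print(broader_transitive(thesaurus, "ex:tie-beam"))
```

With labels in several languages attached to one identifier, an annotation written with the French term and a dataset indexed with the English term resolve to the same concept.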

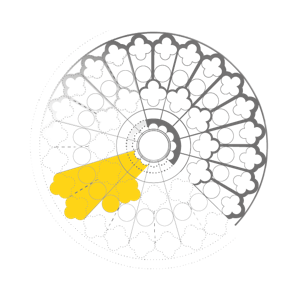

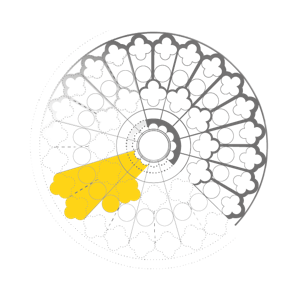

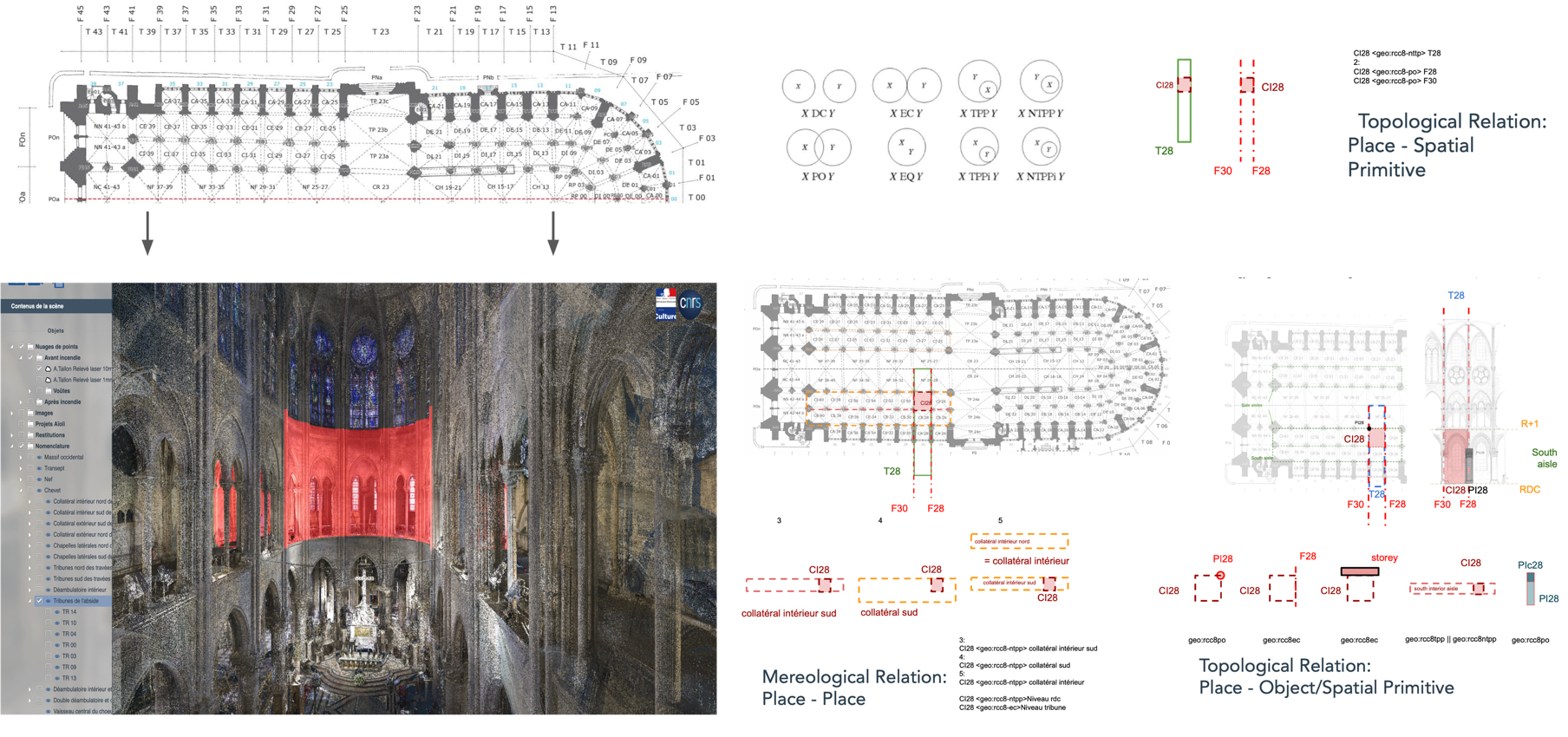

Modelling topological and mereological relationships between spatial entities

Beyond vocabulary, the spatial structure of Notre-Dame serves as an anchor for connecting data. A semantic model was developed to represent topological and mereological relationships between spatial entities, positioning data within the architectural framework through spatial and architectural vocabulary. Using a hierarchical tree of spatial semantic concepts, the 3D representation is segmented into spatial envelopes, such as bounding boxes, to organize data by associating semantic attributes with specific sections of the cathedral.

A graph further links spatial units through relationships like internal, external, or proximity connections, enabling structural associations, such as linking entities in bay T28 to related elements. This approach allows the cathedral’s architecture to propagate semantic attributes, contextualizing each element spatially. For instance, a 3D annotation in a chapel’s spatial envelope automatically connects to related spatial concepts through these defined relationships.
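The propagation of spatial semantics can be sketched as follows: axis-aligned bounding boxes stand in for spatial envelopes, a parent link encodes the part-of hierarchy, and a list of triples encodes topological relations. The envelope names, coordinates, and relation labels are hypothetical and chosen only to make the mechanism visible.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

@dataclass(frozen=True)
class Envelope:
    """A named spatial envelope: an axis-aligned box with a part-of parent."""
    name: str
    parent: Optional[str]
    box_min: Tuple[float, float, float]
    box_max: Tuple[float, float, float]

    def contains(self, point):
        return all(lo <= v <= hi for lo, v, hi in zip(self.box_min, point, self.box_max))

def part_of_chain(envelopes, name):
    """The envelope followed by its successive parents (mereological chain)."""
    chain = []
    while name is not None:
        chain.append(name)
        name = envelopes[name].parent
    return chain

def concepts_for_point(envelopes, point):
    """Spatial concepts inherited by an annotation placed at point, most specific first."""
    containing = [e.name for e in envelopes.values() if e.contains(point)]
    if not containing:
        return []
    deepest = max(containing, key=lambda n: len(part_of_chain(envelopes, n)))
    return part_of_chain(envelopes, deepest)

def related(relations, name, kind):
    """Envelopes linked to name by a symmetric relation of the given kind."""
    out = {b for a, k, b in relations if a == name and k == kind}
    out |= {a for a, k, b in relations if b == name and k == kind}
    return sorted(out)

# Hypothetical envelopes (metres) and one proximity relation.
envelopes = {e.name: e for e in [
    Envelope("cathedral", None, (0, 0, 0), (100, 40, 35)),
    Envelope("nave", "cathedral", (10, 10, 0), (60, 30, 35)),
    Envelope("bay_1", "nave", (10, 10, 0), (20, 30, 35)),
    Envelope("chapel_1", "cathedral", (10, 0, 0), (20, 10, 12)),
]}
relations = [("chapel_1", "proximity", "bay_1")]

print(concepts_for_point(envelopes, (15, 5, 3)))
print(related(relations, "chapel_1", "proximity"))
```

An annotation only needs coordinates: the containing envelope, its ancestors, and its neighbours are derived from the model, which is how the architecture itself carries semantic attributes to each element.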

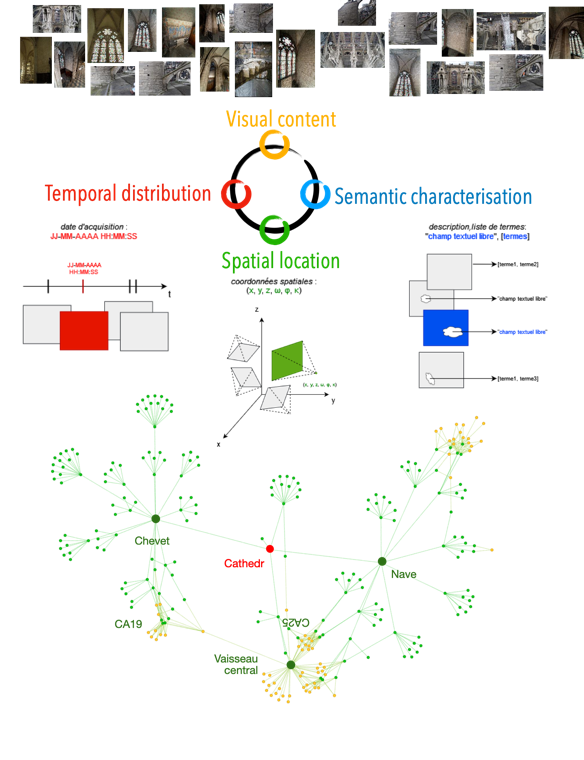

Image classification based on visual, spatio-temporal, and semantic criteria

This approach enables new ways to utilize an interconnected corpus, particularly for classifying the extensive photographic collection. By leveraging attributes from annotations, proximity across multiple dimensions is used for automatic classification. Visual similarity is addressed through computer vision algorithms that group images by shared features. Spatially, photographs are linked to specific areas of the cathedral using spatial semantics. Temporally, EXIF metadata organizes images on a timeline, reflecting restoration activities or the building’s evolution. Semantically, annotations enrich the images with structured vocabulary terms.
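One way to picture proximity across several dimensions is a combined similarity score between two photographs. The sketch below is an assumption-laden illustration: the feature vectors stand in for the output of a computer vision model, the equal weights and the 30-day time scale are arbitrary, and the dictionary keys are hypothetical. Only the timestamp format follows the usual EXIF convention (`YYYY:MM:DD HH:MM:SS`).

```python
import math
from datetime import datetime

EXIF_FORMAT = "%Y:%m:%d %H:%M:%S"

def cosine(u, v):
    """Visual proximity: cosine similarity of two feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0

def jaccard(a, b):
    """Semantic proximity: overlap between two sets of vocabulary terms."""
    a, b = set(a), set(b)
    return len(a & b) / len(a | b) if a | b else 0.0

def temporal(t1, t2, scale_days=30.0):
    """Temporal proximity: decays exponentially with the gap between capture dates."""
    d1 = datetime.strptime(t1, EXIF_FORMAT)
    d2 = datetime.strptime(t2, EXIF_FORMAT)
    days = abs((d1 - d2).total_seconds()) / 86400.0
    return math.exp(-days / scale_days)

def similarity(p, q, weights=(0.25, 0.25, 0.25, 0.25)):
    """Weighted mean of visual, spatial, temporal, and semantic proximity."""
    scores = (
        cosine(p["features"], q["features"]),
        1.0 if p["space"] == q["space"] else 0.0,
        temporal(p["taken"], q["taken"]),
        jaccard(p["terms"], q["terms"]),
    )
    return sum(w * s for w, s in zip(weights, scores))

# Hypothetical photographs described along the four dimensions.
a = {"features": [1.0, 0.0, 0.5], "space": "nave", "taken": "2019:04:20 10:00:00", "terms": {"vault", "stone"}}
b = {"features": [0.9, 0.1, 0.5], "space": "nave", "taken": "2019:04:25 09:00:00", "terms": {"vault"}}
c = {"features": [0.0, 1.0, 0.0], "space": "choir", "taken": "2021:06:01 12:00:00", "terms": {"timber"}}
print(similarity(a, b) > similarity(a, c))
```

A pairwise score of this kind can feed any standard clustering method; changing the weights shifts the grouping towards visual, spatial, temporal, or semantic criteria.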

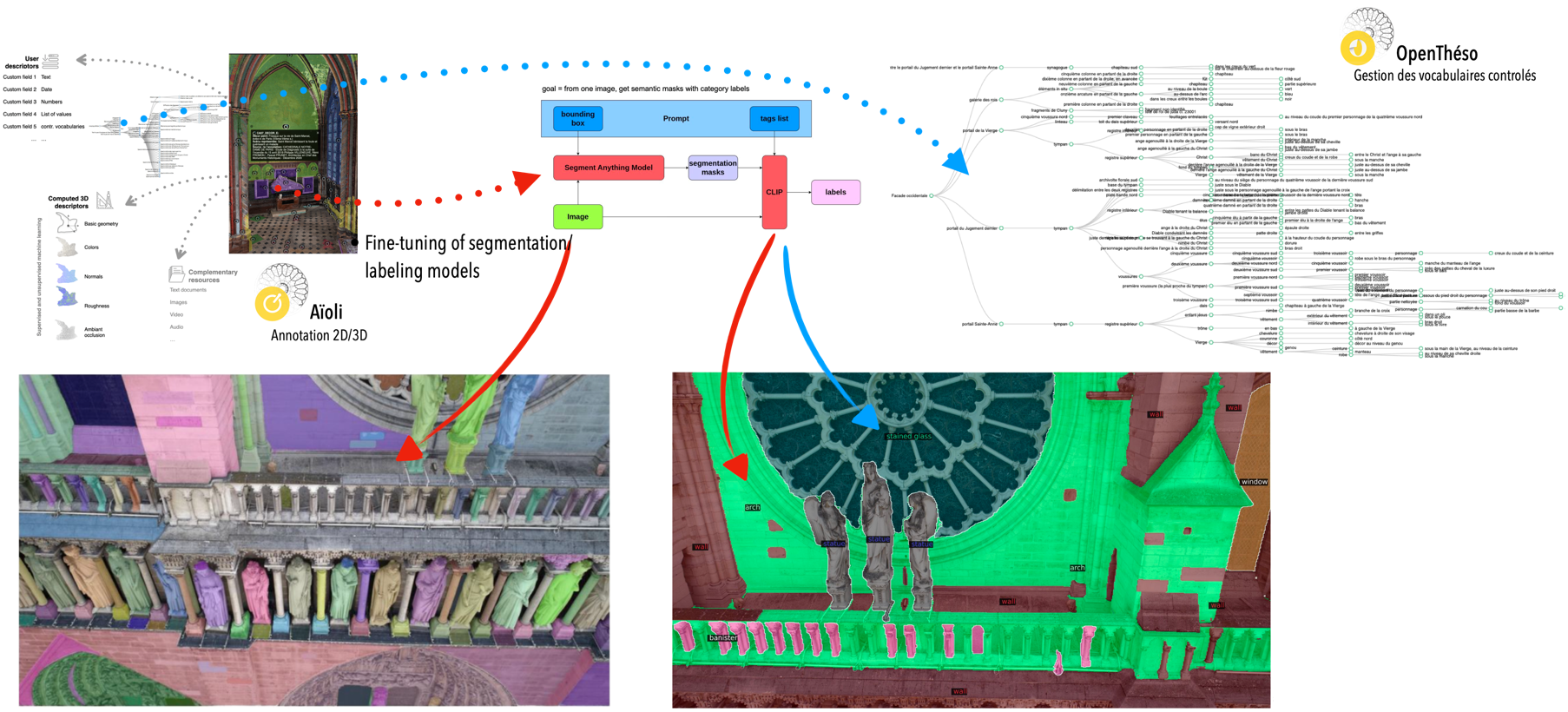

Experimenting with deep learning

Recently, thanks to the Jean Zay supercomputing facility at CNRS, we’ve adopted an exploratory approach to enhancing our semantic annotations through AI and deep learning. This new approach involves automating image segmentation and incorporating shape recognition. Current technologies allow us to identify shapes that models have been trained on, but our challenge is leveraging the vast collection of 2D/3D annotations and vocabulary terms we've gathered to effectively train these models.

For example, we are experimenting with models such as SAM (Segment Anything Model) and CLIP (Contrastive Language-Image Pre-training) to generate segmentation masks on images, which we then link to semantic labels from our thesaurus. The goal is to refine these models so that they not only recognize relevant shapes but also incorporate contextual information, such as material deterioration or decorative elements, based on our extensive annotations.